Forecasting sales from advertisement

1 About the Data

This data set contains statistics about the sales of a product in 200

different markets together with advertising budgets in each of these

markets for different media channels: TV,

Radio, Newspaper. The sales are in thousands

of units and the budget is in thousands of dollars.

2 Problem Statement

In this report we want to build a model that will predict sales as accurately as possible.

3 Importing and Preparing the Data

library(dplyr)

library(tidyverse)

library(knitr)

library(ggplot2)

library(caTools)

library(corrplot)advertising<-read_csv("advertising.csv")

rmarkdown::paged_table(advertising)3.1 Splitting the Data Set into the Training Set and Test Set

split=sample.split(advertising$Sales,SplitRatio = 2/3)3.1.1 Training Set

TrainingSet=subset(advertising,split==TRUE)3.1.2 Test Set

TestSet=subset(advertising,split==FALSE)3.2 Exploratory Analysis on the Training Data

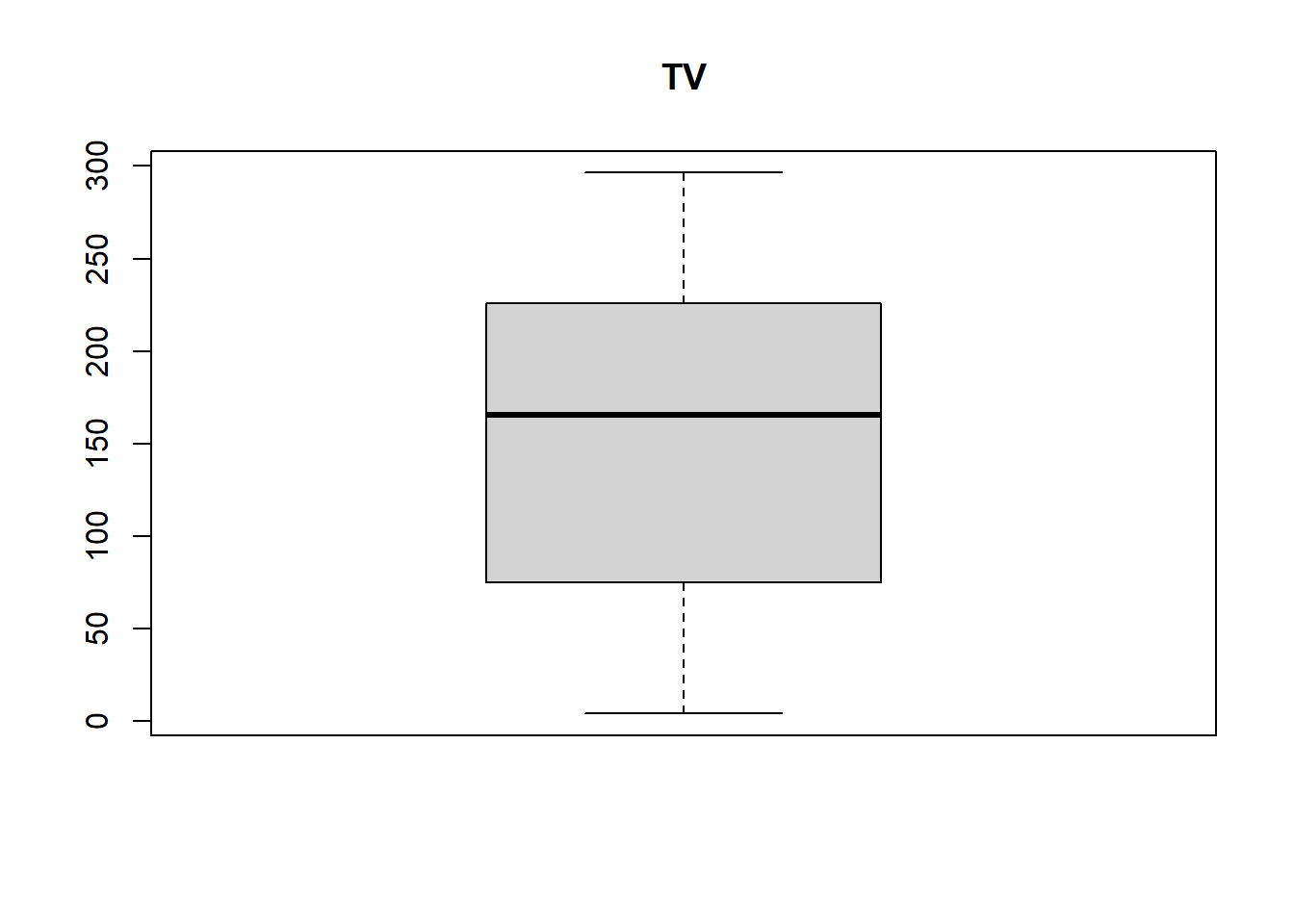

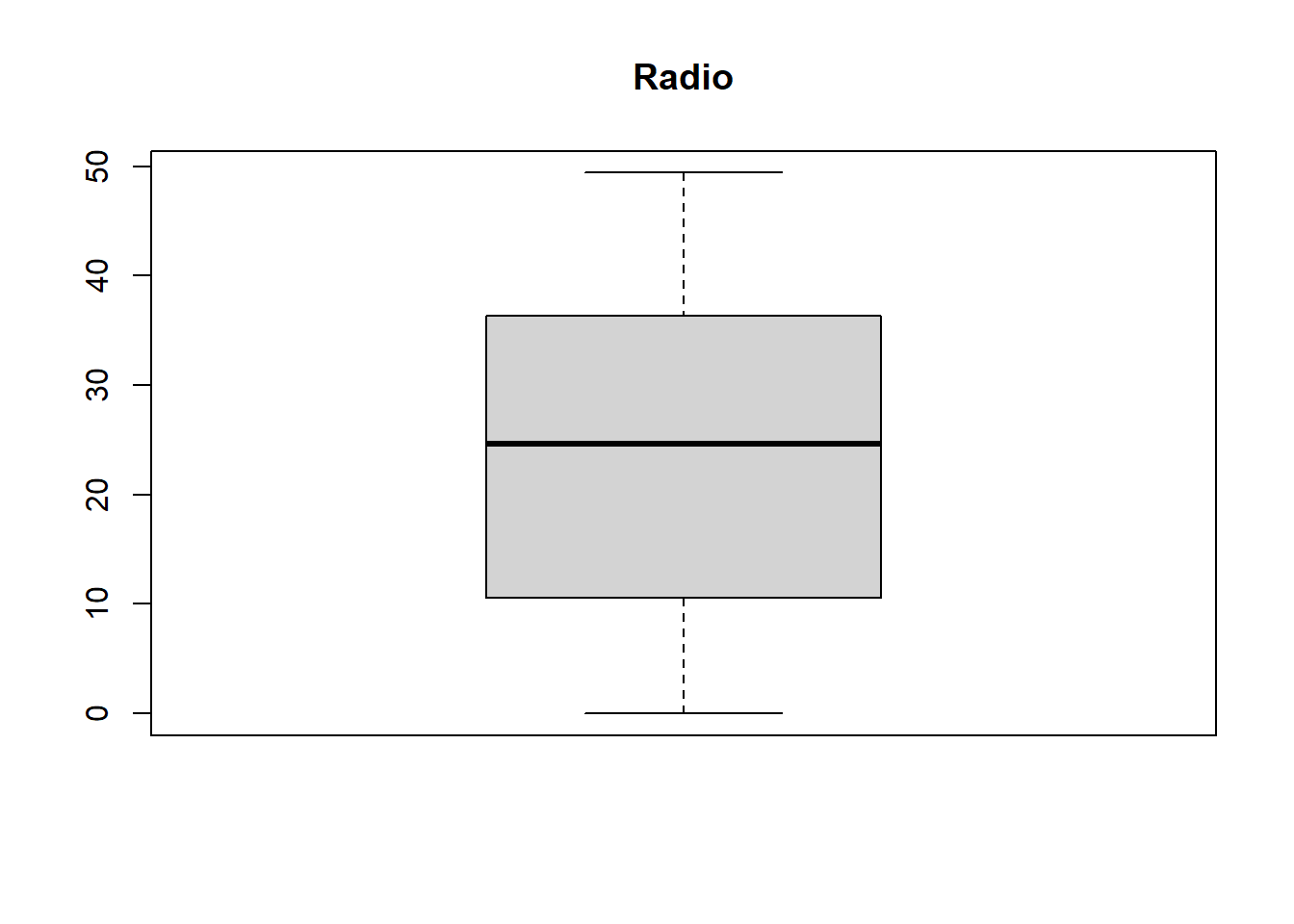

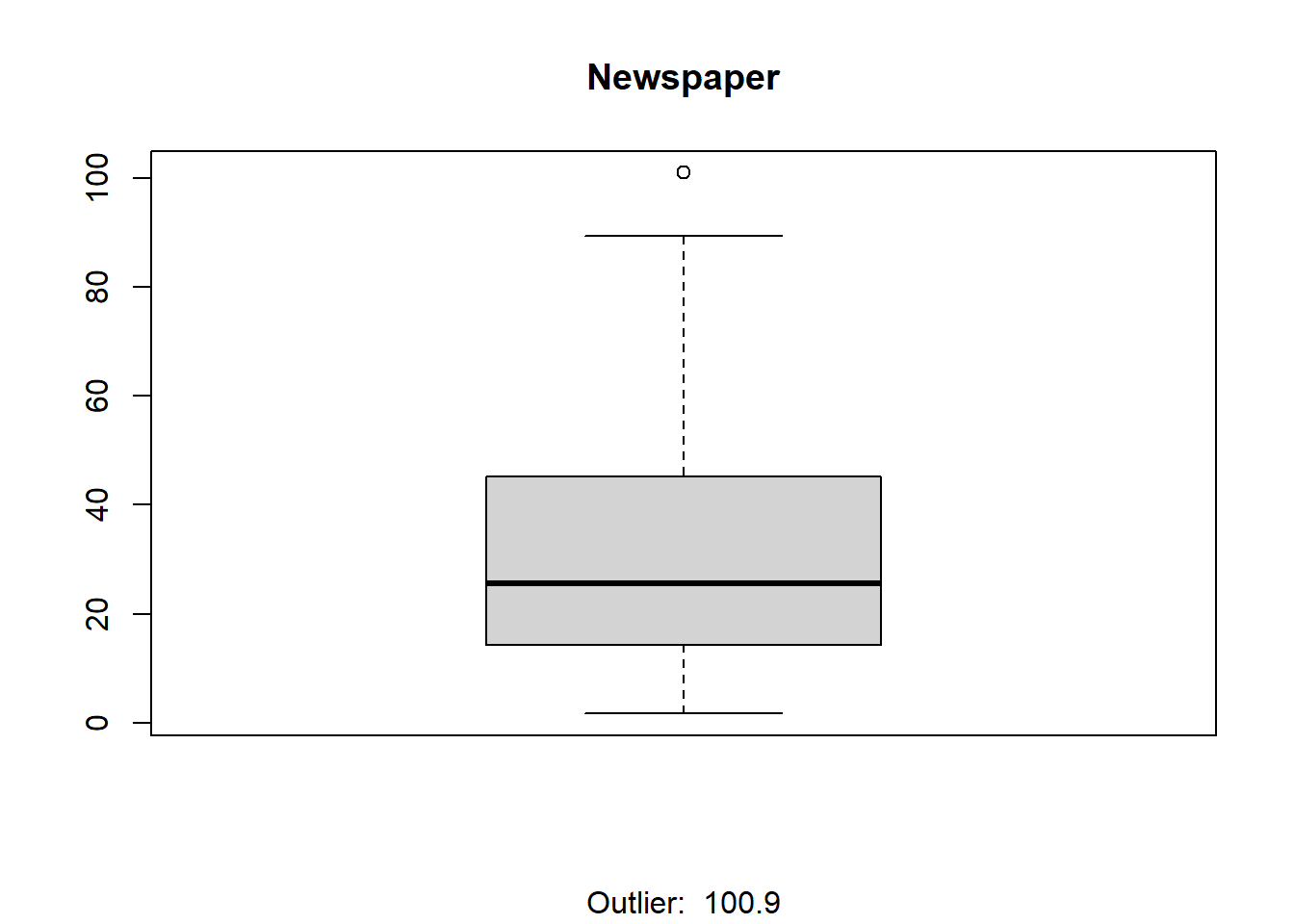

3.2.1 Box Plot

We’re going to use a box plot to provide indication of the data’s symmetry and skewness and to detect any outliers.

boxplot(TrainingSet$Sales,main="Sales",sub=paste(boxplot.stats(TrainingSet$Sales)$out))

boxplot(TrainingSet$TV,main="TV",sub=paste(boxplot.stats(TrainingSet$TV)$out))

boxplot(TrainingSet$Radio,main="Radio",sub=paste( boxplot.stats(TrainingSet$Radio)$out))

boxplot(TrainingSet$Newspaper,main="Newspaper",sub=paste('Outlier: ',boxplot.stats(TrainingSet$Newspaper)$out))

From the plots above it seems only Newspaper has

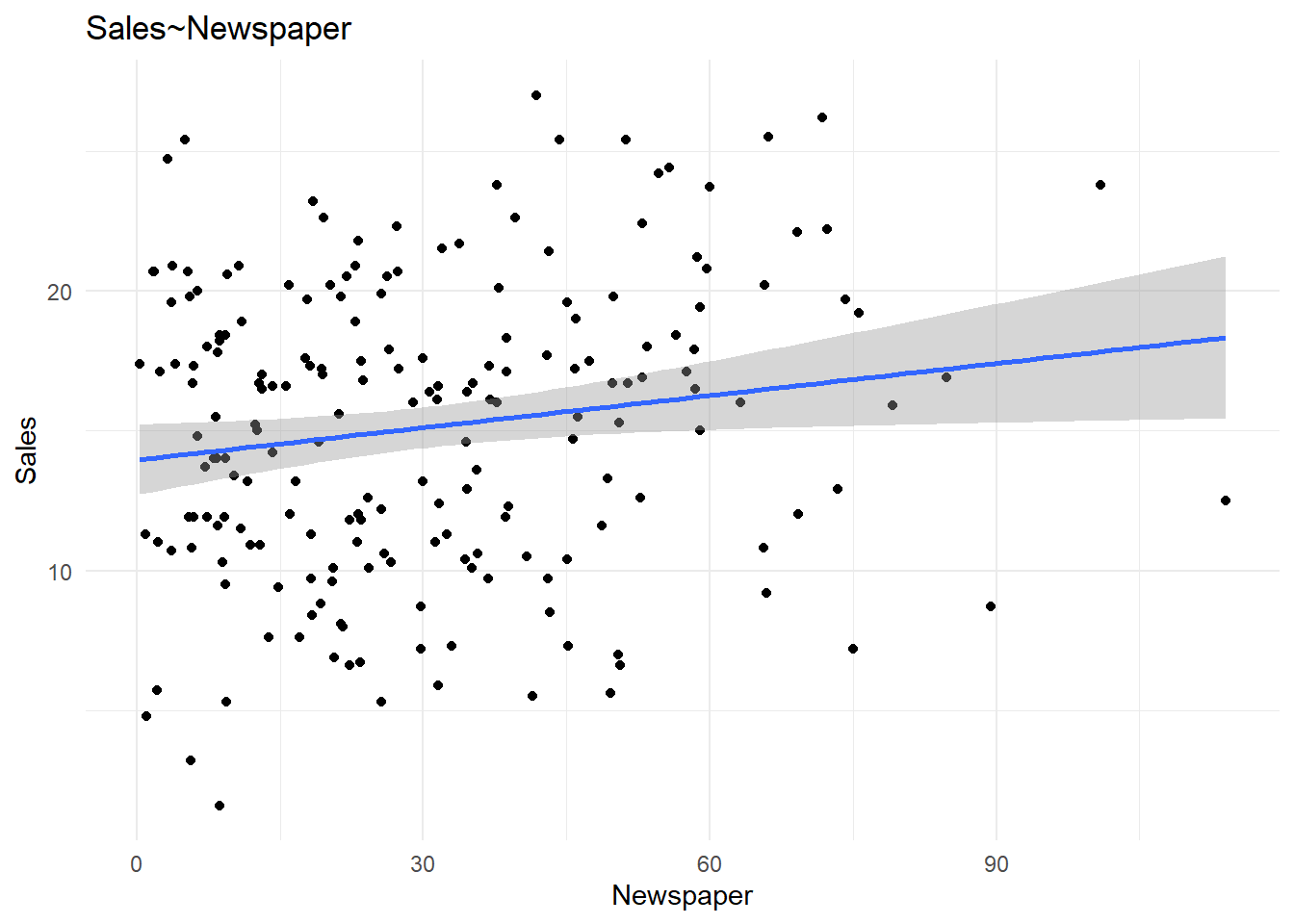

outliers. Lets’ view a scatter plot to see if the outlier has any effect

on it’s relationship with Sales.

ggplot(advertising,aes(x=Newspaper,y=Sales))+geom_point()+geom_smooth(method = 'lm')+labs(title="Sales~Newspaper")+theme_minimal()

The outlier seems to have no significance so we move on.

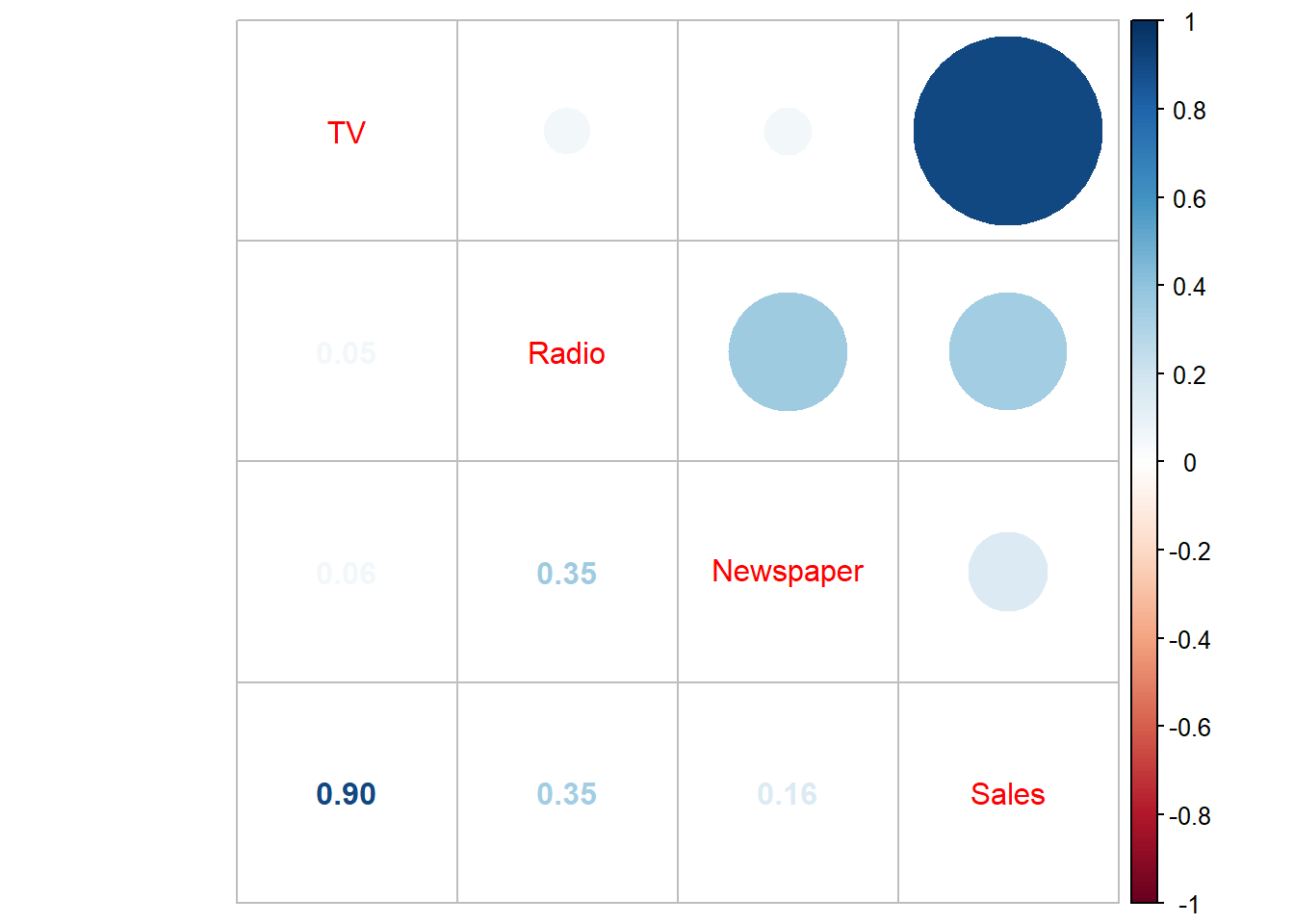

3.2.2 Correlation Plot

cor.matrix<-cor(advertising)

corrplot.mixed(cor.matrix)

There seems to be a significant correlation between

Sales and TV as well as Sales and

Radio, but not so much with Sales and

Newspaper. We could decide to drop it but we’ll check to

see if there are any interaction effects between

Newspaper and any other predictor variable.

NB The main effect is the effect of one of the predictor variables on the response variable. An interaction effect on the other hand occurs if there is an interaction between the predictor variables that affect the outcome of the response variables.

4 Building the Regression Model

model1<-lm(Sales~TV+Radio+Newspaper,data=TrainingSet)

model1$coefficients (Intercept) TV Radio Newspaper

4.684724428 0.052992222 0.122134988 -0.003953022 5 Evaluating Model Performance

summary(model1)

Call:

lm(formula = Sales ~ TV + Radio + Newspaper, data = TrainingSet)

Residuals:

Min 1Q Median 3Q Max

-5.0111 -0.9788 -0.0445 0.9187 3.7998

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.684724 0.358478 13.068 <2e-16 ***

TV 0.052992 0.001588 33.377 <2e-16 ***

Radio 0.122135 0.010064 12.136 <2e-16 ***

Newspaper -0.003953 0.007017 -0.563 0.574

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.599 on 129 degrees of freedom

Multiple R-squared: 0.9145, Adjusted R-squared: 0.9125

F-statistic: 460 on 3 and 129 DF, p-value: < 2.2e-16Here, the Residual standard error is 1.546.

The adjusted r-squared value measures how much of the variation in the response variable is explained by the set of predictors. In this case 91.95% of the variation in

Salesis explained by the set of predictors. But this does not necessarily mean that the model will predict new data accurately.

Let’s take a look at the model accuracy.

pred.model1=predict(model1,newdata = TestSet)

accuracy1=data.frame(cbind(actual=TestSet$Sales,predicted=pred.model1))

cor1=cor(accuracy1)

cor1[1,2][1] 0.9293508Our model predicted our test data with an accuracy of 93.05% .It seems our model is good for predicting but it wouldn’t hurt to improve our prediction accuracy.

6 Improving Model Performance: Accounting For Interaction Effects

model2<-lm(Sales~(TV+Radio+Newspaper)^2,data=TrainingSet)

summary(model2)

Call:

lm(formula = Sales ~ (TV + Radio + Newspaper)^2, data = TrainingSet)

Residuals:

Min 1Q Median 3Q Max

-4.3379 -0.8876 -0.0782 0.8848 3.4963

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 6.479e+00 6.333e-01 10.229 < 2e-16 ***

TV 4.304e-02 3.160e-03 13.622 < 2e-16 ***

Radio 4.618e-02 2.276e-02 2.029 0.044533 *

Newspaper -1.452e-02 2.125e-02 -0.683 0.495754

TV:Radio 4.125e-04 1.164e-04 3.544 0.000553 ***

TV:Newspaper -3.175e-06 7.206e-05 -0.044 0.964928

Radio:Newspaper 3.816e-04 4.715e-04 0.809 0.419887

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.516 on 126 degrees of freedom

Multiple R-squared: 0.925, Adjusted R-squared: 0.9214

F-statistic: 259 on 6 and 126 DF, p-value: < 2.2e-16As expected there are some interaction effects. Here, between

TVandRadio.Also, we see that the Residuals standard error is 1.511. This is an improvement from our previous model.

The adjusted r-squared is 0.9192 Therefore in this case 93% of the variation in

Salesis explained by the set of predictors. This is also an improvement from our previous model.

Let’s take a look at the model accuracy.

pred.model2=predict(model2,newdata = TestSet)

accuracy2=data.frame(cbind(actual=TestSet$Sales,predicted=pred.model2))

cor2=cor(accuracy2)

cor2[1,2][1] 0.9390918In our improved model, the model accuracy is 94.16%, not quite a big improvement from our previous model, but an improvement nonetheless.

7 Summary of Our Two Models: The Original and Improved

We compare the two models

\[Original\ model: Sales \approx \beta_0+\beta_1\cdot TV + \beta_2 \cdot Radio + \beta_3 \cdot Newspaper\] \[Improved\ model: Sales \approx \beta_0+\beta_1 \cdot TV+\beta_2 \cdot Radio+ \beta_3 \cdot Newspaper+\\\beta_4\cdot (TV \cdot Radio)+ \beta_5 \cdot(TV \cdot Newspaper)+ \beta_6 \cdot (Radio \cdot Nespaper)\]

RSE<-c(summary(model1)$sigma,summary(model2)$sigma)

Rsquared<-c(summary(model1)$r.squared,summary(model2)$r.squared)

prediction.accuracy<-c(cor1[1,2],cor2[1,2])

overview<-data.frame(RSE,Rsquared,prediction.accuracy)

rownames(overview)<-c("Original model","Improved model")

kable(t(overview))| Original model | Improved model | |

|---|---|---|

| RSE | 1.5993414 | 1.5157917 |

| Rsquared | 0.9145195 | 0.9250029 |

| prediction.accuracy | 0.9293508 | 0.9390918 |